
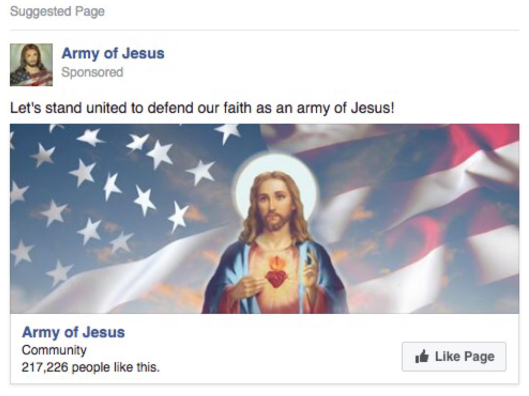

“Investigating the Role of Ads and Foreign Interference. After the 2016 election, we learned from press accounts and statements by congressional leaders that Russian actors might have tried to interfere in the election by exploiting Facebook’s ad tools. This is not something we had seen before, and so we started an investigation that continues to this day. We found that fake accounts associated with the IRA spent approximately $100,000 on more than 3,000 Facebook and Instagram ads between June 2015 and August 2017. Our analysis also showed that these accounts used these ads to promote the roughly 120 Facebook Pages they had set up, which in turn posted more than 80,000 pieces of content between January 2015 and August 2017. The Facebook accounts that appeared tied to the IRA violated our policies because they came from a set of coordinated, inauthentic accounts. We shut these accounts down and began trying to understand how they misused our platform.” Excerpt from the Statement of Facebook VP and General Counsel Colin Stretch. Read more here: https://www.intelligence.senate.gov/sites/default/files/documents/os-cstretch-110117.pdf

Who is Hillary fighting? Is that Jared Kushner?

“Opening Statement of Vice Chairman Warner from Senate Intel Open Hearing with Social Media Representatives Nov 01 2017

As Prepared for Delivery

In this age of social media, you can’t afford to waste too much time – or too many characters – in getting the point across, so I’ll get straight to the bottom line.

Russian operatives are attempting to infiltrate and manipulate social media to hijack the national conversation and to make Americans angry, to set us against ourselves, and to undermine our democracy. They did it during the 2016 U.S. presidential campaign. They are still doing it now. And not one of us is doing enough to stop it.

That is why we are here today.

In many ways, this threat is not new. Russians have been conducting information warfare for decades.

But what is new is the advent of social media tools with the power to magnify propaganda and fake news on a scale that was unimaginable back in the days of the Berlin Wall. Today’s tools seem almost purpose-built for Russian disinformation techniques.

Russia’s playbook is simple, but formidable. It works like this:

1. Disinformation agents set up thousands of fake accounts, groups and pages across a wide array of platforms.

2. These fake accounts populate content on Facebook, Instagram, Twitter, YouTube, Reddit, LinkedIn, and others.

3. Each of these fake accounts spends months developing networks of real people to follow and like their content, boosted by tools like paid ads and automated bots. Most of their real-life followers have no idea they are caught up in this web.

4. These networks are later utilized to push an array of disinformation, including stolen emails, state-led propaganda (like RT and Sputnik), fake news, and divisive content.

The goal here is to get this content into the news feeds of as many potentially receptive Americans as possible and to covertly and subtly push them in the direction the Kremlin wants them to go.

As one who deeply respects the tech industry and was involved in the tech business for twenty years, it has taken me some time to really understand this threat. Even I struggle to keep up with the language and mechanics. The difference between bots, trolls, and fake accounts. How they generate Likes, Tweets, and Shares. And how all of these players and actions are combined into an online ecosystem.

What is clear, however, is that this playbook offers a tremendous bang for the disinformation buck.

With just a small amount of money, adversaries use hackers to steal and weaponize data, trolls to craft disinformation, fake accounts to build networks, bots to drive traffic, and ads to target new audiences. They can force propaganda into the mainstream and wreak havoc on our online discourse. That’s a big return on investment.

So where do we go from here?

It will take all of us – the platform companies, the United States government, and the American people – to deal with this new and evolving threat.

The social media and innovative tools each of you have developed have changed our world for the better.

You have transformed the way we do everything from shopping for groceries to growing our small businesses. But Russia’s actions are further exposing the dark underbelly of the ecosystem you have created. And there is no doubt that their successful campaign will be replicated by other adversaries – both nation states and terrorists – that wish to do harm to democracies around the globe.

As such, each of you here today needs to commit more resources to identifying bad actors and, when possible, preventing them from abusing our social media ecosystem.

Thanks in part to pressure from this Committee, each company has uncovered some evidence of the ways Russians exploited their platforms during the 2016 election.

For Facebook, much of the attention has been focused on the paid ads Russian trolls targeted to Americans. However, these ads are just the tip of a very large iceberg. The real story is the amount of misinformation and divisive content that was pushed for free on Russian-backed pages, which then spread widely on the News Feeds of tens of millions of Americans.

According to data Facebook has provided, 120 Russian-backed Pages built a network of over [3.3] million real people.

From these now-suspended Pages, 80,000 organic unpaid posts reached an estimated 126 million real people. That is an astonishing reach from just one group in St. Petersburg. And I doubt that the so-called Internet Research Agency represents the only Russian trolls out there. Facebook has more work to do to see how deep this goes, including looking into the reach of the IRA-backed Instagram posts, which represent another 120,000 pieces of content – more Russian content on Instagram than even Facebook.

The anonymity provided by Twitter and the speed with which it shares news make it an ideal tool to spread disinformation.

According to one study, during the 2016 campaign, junk news actually outperformed real news in some battleground states in the lead-up to Election Day.[1] Another study found that bots generated one out of every five political messages posted on Twitter over the entire presidential campaign.[2]

I’m concerned that Twitter seems to be vastly under-estimating the number of fake accounts and bots pushing disinformation. Independent researchers have estimated that up to 15% of Twitter accounts – or potentially 48 million accounts – are fake or automated.[3]

Despite evidence of significant incursion and outreach from researchers, Twitter has, to date, only uncovered a small percentage of that activity. Though, I am pleased to see, Twitter, that your number has been rising in recent weeks.

Google’s search algorithms continue to have problems in surfacing fake news or propaganda. Though we can’t necessarily attribute this to the Russian effort, false stories and unsubstantiated rumors were elevated on Google Search during the recent mass shooting in Las Vegas. Meanwhile, YouTube has become RT’s go-to platform. Google has now uncovered 1100 videos associated with this campaign. Much more of your content was likely spread through other platforms.

It is not just the platforms that need to do more. The U.S. government has thus far proven incapable of adapting to meet this 21st century challenge. Unfortunately, I believe this effort is suffering, in part, because of a lack of leadership at the top. We have a President who remains unwilling to acknowledge the threat that Russia poses to our democracy. President Trump should stop actively delegitimizing American journalism and acknowledge this real threat posed by Russian propaganda.

Congress, too, must do more. We need to recognize that current law was not built to address these threats. I have partnered with Senators Klobuchar and McCain on a light-touch, legislative approach, which I hope my colleagues will review.

The Honest Ads Act is a national security bill intended to protect our elections from foreign influence.

Finally – but perhaps most importantly – the American people also need to be aware of what is happening on our news feeds. We all need to take a more discerning approach to what we are reading and sharing, and who we are connecting with online. We need to recognize that the person at the other end of that Facebook or Twitter argument may not be a real person at all.

The fact is that this Russian weapon has already proven its success and cost effectiveness.

We can be assured that other adversaries, including foreign intelligence operatives and potentially terrorist organizations, are reading their playbook and already taking action. We must act.

To our witnesses today, I hope you will detail what you saw in this last election and tell us what steps you will undertake to get ready for the next one. We welcome your participation and encourage your commitment to addressing this shared responsibility.

[1] Oxford Internet Institute (Phil Howard): “Social Media, News and Political Information During the U.S. Election: Was Polarizing Content Concentrated in Swing States?” (September 28, 2017).

[2] USC: “Social Bots Distort the 2016 U.S. Presidential Election Online Discussion” (November 2016).

[3] University of Southern California and Indiana University: “Online Human-Bot Interactions: Detection, Estimation, and Characterization” (March 2017).”

https://www.warner.senate.gov/public/index.cfm/bloghome

See much more here: https://www.intelligence.senate.gov/hearings/open-hearing-social-media-influence-2016-us-elections

The NY Times has additional ads that aren’t at the links above (possibly in the hearing videos): “These Are the Ads Russia Bought on Facebook in 2016” https://nyti.ms/2z5Ei4W
